The Future Is Now: How Speech Recognition Came To Be, And How A Tiny Audio Edge Processor Plays A Critical Role

June 18, 2019 - Sam Toba, Knowles Intelligent Audio

In a previous blog post, we discussed the value of dedicated audio edge processors: they make voice-enabled devices more efficient, delivering better performance through lower latency, lower power and improved privacy. We also explained why Knowles AISonic™ audio edge processors are a ‘sound investment’ for these applications.

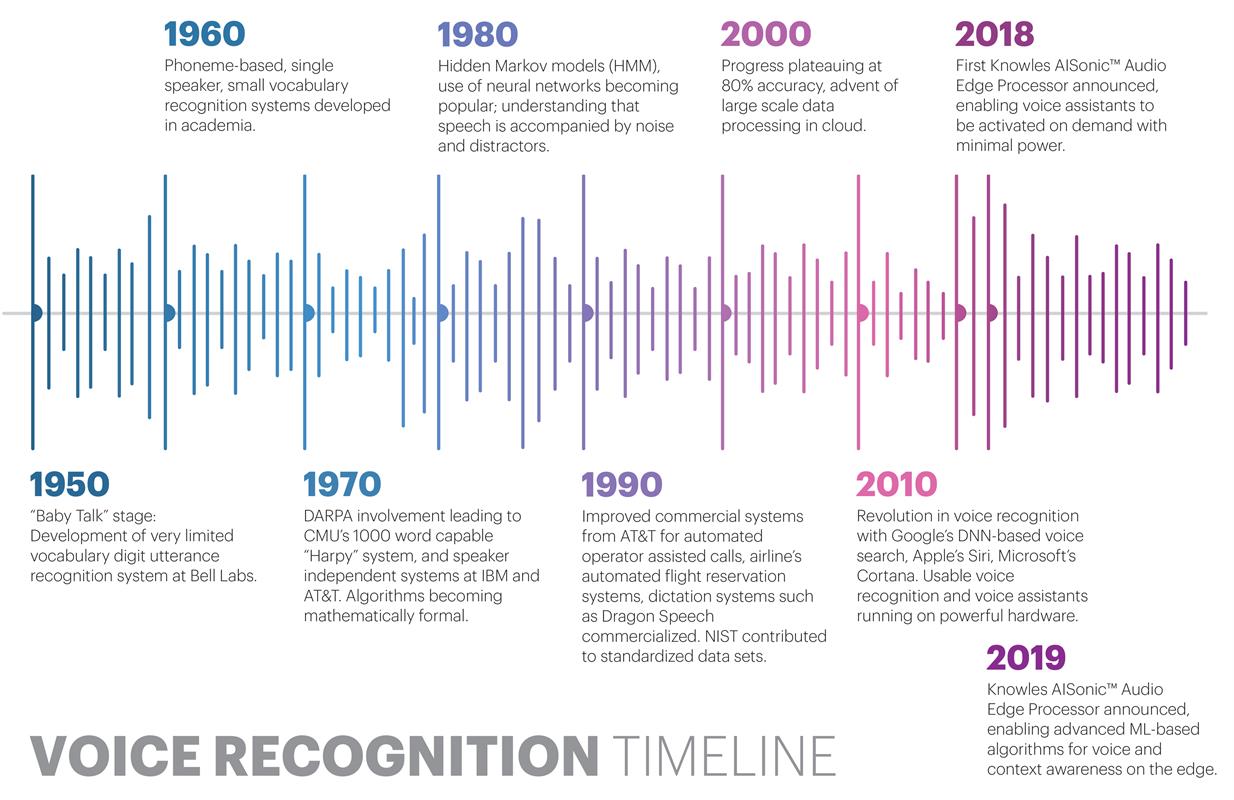

Today, we will look at what exactly a dedicated audio edge processor can do. We will focus on voice as a user interface and, in particular, on voice wake, a task for which a dedicated audio edge processor is an ideal power-efficient solution. But before we dive into this topic, it helps to understand the history of speech recognition.

So where does an audio edge processor fit in, and what does it do? One key function is voice wake: it acts as a low-power, voice-activated switch that keeps the power-hungry application processor off until the intended keyword or command is detected. Voice wake is a natural step forward from the days when you had to unlock your phone, press a button and then say a command. The audio edge processor stays on all the time at very low power (typically in the low single-digit mA range), listening for a predetermined wake word. Importantly, while it listens, audio is held only in a loop buffer and is continuously overwritten and discarded as the buffer fills up, which protects user privacy. To improve accuracy, most systems also filter out unwanted noise, whether babble or chatter from unenrolled voices, music or other environmental noise. The keyword engine is often DNN-based.
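The loop-buffer behavior described above can be pictured as a fixed-size ring buffer: each new audio frame pushes out the oldest one, and nothing leaves the buffer unless the keyword detector fires. The following is a minimal Python sketch under that assumption, not Knowles firmware or a Knowles API. The `WakeGate` class, the frame format and `toy_detector` are hypothetical, and `toy_detector` merely stands in for a real (often DNN-based) keyword model.

```python
from collections import deque
from typing import Callable, Deque, List, Optional

# Hypothetical frame type: one short block of PCM samples.
Frame = List[int]


class WakeGate:
    """Always-on listener sketch (hypothetical, not a Knowles API).

    Only the most recent `capacity_frames` frames are kept; older audio is
    discarded as new frames arrive. When the keyword detector fires, the
    buffered frames are returned so they can be handed to the application
    processor.
    """

    def __init__(self, capacity_frames: int,
                 detect_keyword: Callable[[List[Frame]], bool]) -> None:
        # A deque with maxlen drops its oldest item on every append once full.
        self.buffer: Deque[Frame] = deque(maxlen=capacity_frames)
        self.detect_keyword = detect_keyword

    def push(self, frame: Frame) -> Optional[List[Frame]]:
        """Add one frame; return the buffered audio if the keyword fired."""
        self.buffer.append(frame)
        if self.detect_keyword(list(self.buffer)):
            captured = list(self.buffer)
            # Design choice for this sketch: start fresh after each wake-up.
            self.buffer.clear()
            return captured
        return None  # keep the application processor asleep


def toy_detector(frames: List[Frame]) -> bool:
    # Stand-in for a real keyword model: "detects" the marker sample 999.
    return bool(frames) and 999 in frames[-1]


gate = WakeGate(capacity_frames=3, detect_keyword=toy_detector)
for frame in [[1], [2], [3], [4], [999]]:
    captured = gate.push(frame)
    if captured is not None:
        print("wake application processor with", captured)
# prints: wake application processor with [[3], [4], [999]]
```

Because the deque has a fixed `maxlen`, memory use stays constant however long the device listens, and in this sketch audio older than the buffer window no longer exists anywhere.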

Why not have the application processor do this? It’s a fair question. In early systems, voice input could only be triggered by pressing the home button, or when the device was plugged into a power adapter that could sustain the application processor’s high power consumption. Only in the past few years has an effective low-power solution emerged, with the advent of very low-power audio edge processors such as the Knowles AISonic IA610 SmartMic (which is a whole other story: an incredibly small DSP built inside a MEMS microphone package).
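A back-of-the-envelope estimate shows why keeping the application processor listening all the time is impractical. The sketch below simply divides a battery capacity by a constant current draw. Only the edge-processor figure echoes the text (“low single-digit mA”); the battery capacity and the application-processor current are hypothetical round numbers chosen for illustration, not measurements of any Knowles or phone product.

```python
def listening_hours(battery_mah: float, current_ma: float) -> float:
    """Hours a battery could sustain a constant always-on current draw,
    ignoring everything else the device does (a deliberate simplification)."""
    if current_ma <= 0:
        raise ValueError("current must be positive")
    return battery_mah / current_ma


BATTERY_MAH = 3000.0       # hypothetical phone battery capacity
EDGE_PROCESSOR_MA = 2.0    # within the "low single-digit mA" range from the text
APP_PROCESSOR_MA = 100.0   # hypothetical, for illustration only

print(listening_hours(BATTERY_MAH, EDGE_PROCESSOR_MA))  # 1500.0
print(listening_hours(BATTERY_MAH, APP_PROCESSOR_MA))   # 30.0
```

Under these assumed numbers, the dedicated listener could run for weeks on its own, while an always-on application processor would drain the same battery in about a day before the user did anything else.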

But why does the voice-activated audio edge processor need so much processing? It turns out that, for a positive user experience, the solution must detect the correct keyword with high accuracy, even across divergent accents, while completely ignoring similar-sounding words, which can be very difficult to achieve. Then there are real-life environments: people talking, a TV playing, phones ringing, a microwave beeping, not to mention users saying the keyword from far away. This means extensive noise-removal algorithms need to run, including compute-intensive multi-microphone beamformers, to give the user the best performance. The audio edge processor offloads these tasks from the application processor and runs them all on a very low-power, customized processor built around DSPs specifically designed to handle complex audio and AI inferencing. Only after the keyword is detected does the application processor need to wake up to process the rest of the command or hand it over to the cloud. By detecting the voice at the ‘edge of the edge’, the entire voice UI chain is optimized for power, performance and privacy.
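To give a feel for what a multi-microphone beamformer does, here is the simplest classic variant, delay-and-sum, as a short Python sketch. The post does not say which beamforming algorithms Knowles processors run; production beamformers are typically more elaborate (fractional delays, adaptive weights), so treat this only as an illustration of the principle. The function name and the integer-sample delays are assumptions of this sketch.

```python
from typing import List, Sequence


def delay_and_sum(channels: Sequence[Sequence[float]],
                  delays: Sequence[int]) -> List[float]:
    """Delay-and-sum beamformer with integer sample delays (illustrative).

    channels[m][n] is sample n from microphone m; delays[m] is how many
    samples channel m is delayed so that sound from the steering direction
    lines up across all microphones. Aligned signals add coherently, while
    sound from other directions stays misaligned and is partly averaged away.
    """
    if not channels or len(channels) != len(delays):
        raise ValueError("need at least one channel and one delay per channel")
    length = len(channels[0])
    if any(len(ch) != length for ch in channels):
        raise ValueError("all channels must have the same length")
    if any(d < 0 for d in delays):
        raise ValueError("delays must be non-negative")

    out = [0.0] * length
    for ch, d in zip(channels, delays):
        # Shift this channel right by d samples and accumulate.
        for n in range(d, length):
            out[n] += ch[n - d]
    return [s / len(channels) for s in out]


# A pulse reaches mic 1 one sample later than mic 0.
mic0 = [0.0, 1.0, 0.0, -1.0, 0.0]
mic1 = [0.0, 0.0, 1.0, 0.0, -1.0]
print(delay_and_sum([mic0, mic1], [1, 0]))  # [0.0, 0.0, 1.0, 0.0, -1.0]
print(delay_and_sum([mic0, mic1], [0, 0]))  # [0.0, 0.5, 0.5, -0.5, -0.5]
```

Steering with delays `[1, 0]` lines up the two copies so the pulse keeps its full amplitude; with no steering delays, the same pulse is smeared across neighboring samples at half amplitude.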

It may seem as though the audio edge processor’s role is pretty narrow. The good news is that detecting a keyword without breaking the proverbial power bank is only the beginning. Creative algorithm providers are developing many more tasks on our platform that require continuous monitoring of high-bandwidth data.

We are entering an era in which phones and other electronic devices can detect their environmental context. Context awareness makes a device more useful by letting it offer contextually relevant features. Underlying all of this, however, is the need for low power and high compute. Our audio edge processors meet this seemingly contradictory requirement. A few weeks ago, we announced a new addition to our family of Knowles AISonic™ Audio Edge Processors, the IA8201, specifically designed for advanced audio and machine learning applications, enhancing power-efficient intelligence and privacy at the edge. You can learn more about the product here.

In our next blog post in this series, we’ll peek under the hood and discuss in detail how high-performance voice activation is achieved.